Parvez Ahammad on applying machine learning to security

The O’Reilly Security Podcast: Scaling machine learning for security, the evolving nature of security data, and how adversaries can use machine learning against us.

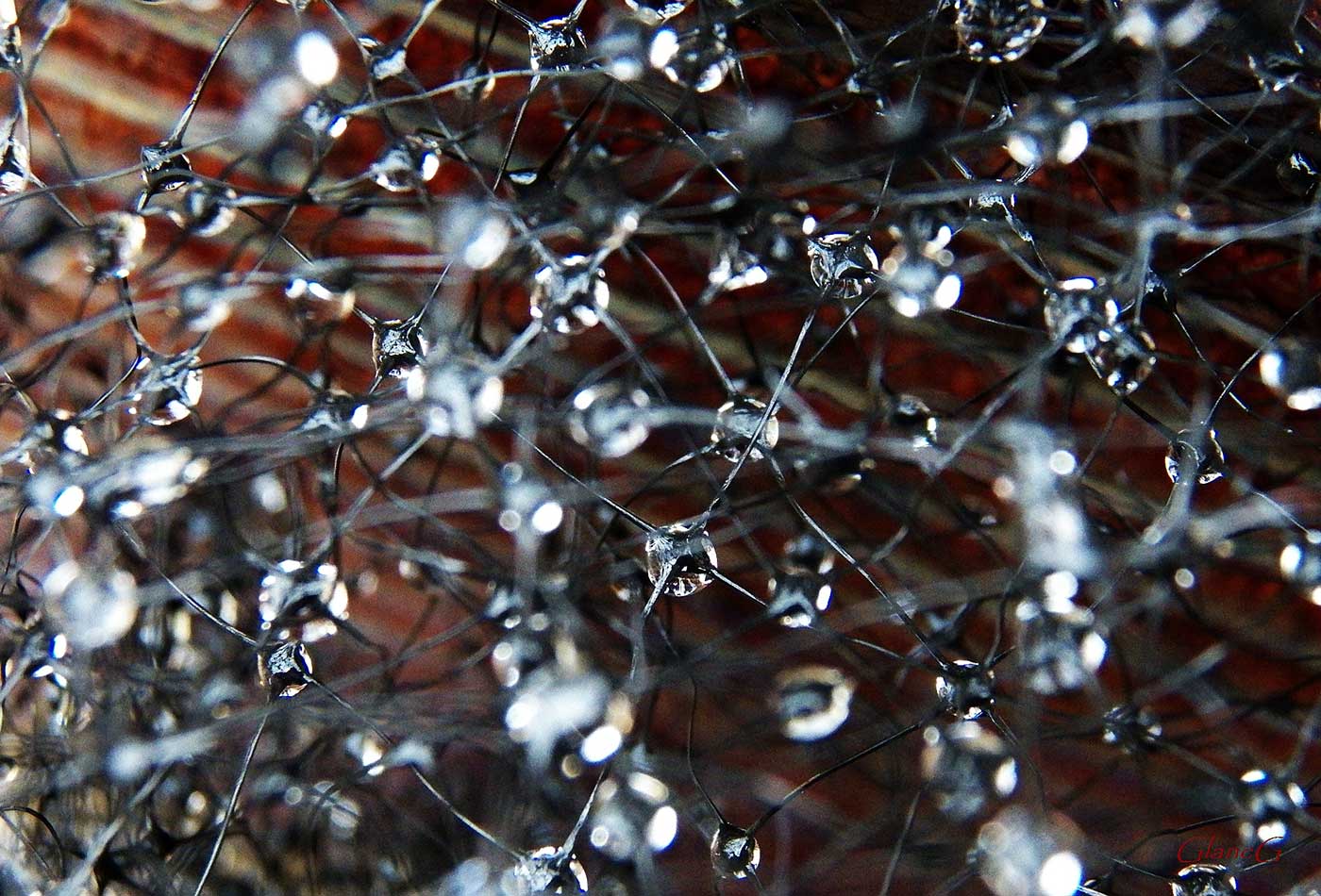

Complexity. (source: Photo fiddler on Flickr)

In this special episode of the Security Podcast, O’Reilly’s Ben Lorica talks with Parvez Ahammad, who leads the data science and machine learning efforts at Instart Logic. He has applied machine learning in a variety of domains, most recently to computational neuroscience and security. Lorica and Ahammad discuss the challenges of using machine learning in information security.

Here are some highlights:

Scaling machine learning for security

If you look at a day’s worth of logs, even for a mid-size company, it’s billions of rows. The scale of the problem is incredibly large. Typically, people curate a small data set, convince themselves that using only a small subset of the data is reasonable, and then go to work on that subset, mostly because they’re unsure how to build a scalable system. They’ve perhaps already committed to a particular machine learning method without thinking strategically about what their situation really requires. Within my company, I have a colleague from a hardcore security background, and I come from a more traditional machine learning background. We butt heads, and we essentially help each other learn about the other’s paradigm and how to think about it.

The evolving nature of security data and the exploitation of machine learning by adversaries

If you take a survey, you’ll see that most machine learning applications are supervised. What you’re assuming there is that the data you collected reflects the true underlying distribution. In statistics, this is called the stationarity assumption. You assume that this batch is representative of what you’re going to see later. You split your data into two parts; you train on one part and you test on the other. The issue is that, especially in security, there is an adversary. Any time you settle down and build a classifier, there is somebody actively working to break it, so no assumption of stationarity is going to hold. There are people and botnets actively trying to get around whatever model you constructed. There is an adversarial nature to the problem. These two-sided problems are typically dealt with in a game-theoretic framework.
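To make the stationarity point concrete, here is a minimal Python sketch; it is an illustration and not something discussed in the podcast. It assumes a toy one-dimensional "suspiciousness score" drawn from Gaussians, and a hypothetical threshold classifier placed midway between the class means. The classifier is trained and tested on one batch, then evaluated again after a simulated adversary moves the malicious traffic closer to benign traffic. All names and parameters are assumptions chosen for the illustration.

```python
import random
import statistics

def sample(n, benign_mean, malicious_mean, rng):
    """Draw n benign (label 0) and n malicious (label 1) scores, shuffled."""
    data = [(rng.gauss(benign_mean, 1.0), 0) for _ in range(n)]
    data += [(rng.gauss(malicious_mean, 1.0), 1) for _ in range(n)]
    rng.shuffle(data)
    return data

def fit_threshold(train):
    """Toy classifier: put the threshold midway between the class means."""
    benign = [x for x, y in train if y == 0]
    malicious = [x for x, y in train if y == 1]
    return (statistics.mean(benign) + statistics.mean(malicious)) / 2

def accuracy(threshold, data):
    """Fraction of samples where 'score above threshold' matches the label."""
    correct = sum((x > threshold) == (y == 1) for x, y in data)
    return correct / len(data)

rng = random.Random(0)

# One collected batch, split into a training half and a test half.
batch = sample(2000, benign_mean=0.0, malicious_mean=4.0, rng=rng)
split = len(batch) // 2
train, test = batch[:split], batch[split:]

threshold = fit_threshold(train)
stationary_acc = accuracy(threshold, test)

# Later traffic: the adversary has adapted, so malicious scores now
# sit much closer to benign ones (assumed shift from mean 4.0 to 1.0).
shifted = sample(1000, benign_mean=0.0, malicious_mean=1.0, rng=rng)
shifted_acc = accuracy(threshold, shifted)

print(f"held-out accuracy, same distribution: {stationary_acc:.2f}")
print(f"accuracy after adversarial shift:     {shifted_acc:.2f}")
```

The held-out score looks reassuring only because the test half comes from the same batch as the training half; once the malicious distribution moves, the same threshold misses most of it.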

Basically, you assume there’s an adversary. We’ve recently seen research papers on this topic. One approach we’ve seen is that you can poison a machine learning classifier so that it behaves maliciously, either by tampering with how the training samples are constructed or by shifting the distribution the classifier sees. Alternatively, you can try to construct safe machine learning approaches that start from the assumption that there will be an adversary, and then reason through what you can do to thwart that adversary.
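As a rough illustration of poisoning, the Python sketch below reuses the idea of a toy threshold classifier on a one-dimensional score. It is not taken from the research papers mentioned above; the Gaussian data, the midpoint rule, and the amount of injected data are all assumptions made for the example. The simulated attacker slips high-scoring samples into the training set under a benign label, which drags the learned threshold upward so that real malicious traffic passes below it.

```python
import random
import statistics

def fit_threshold(benign, malicious):
    """Toy classifier: put the threshold midway between the class means."""
    return (statistics.mean(benign) + statistics.mean(malicious)) / 2

def detection_rate(threshold, malicious):
    """Fraction of malicious samples scoring above the threshold."""
    return sum(x > threshold for x in malicious) / len(malicious)

rng = random.Random(1)

# Clean training data and a held-out set of malicious samples.
benign_train = [rng.gauss(0.0, 1.0) for _ in range(1000)]
malicious_train = [rng.gauss(4.0, 1.0) for _ in range(1000)]
malicious_test = [rng.gauss(4.0, 1.0) for _ in range(1000)]

clean_threshold = fit_threshold(benign_train, malicious_train)
clean_rate = detection_rate(clean_threshold, malicious_test)

# Poisoning (assumed scenario): 500 extreme samples are injected into
# the training set with a benign label, inflating the benign mean.
poison = [rng.gauss(12.0, 1.0) for _ in range(500)]
poisoned_threshold = fit_threshold(benign_train + poison, malicious_train)
poisoned_rate = detection_rate(poisoned_threshold, malicious_test)

print(f"threshold clean / poisoned:      {clean_threshold:.2f} / {poisoned_threshold:.2f}")
print(f"detection rate clean / poisoned: {clean_rate:.2f} / {poisoned_rate:.2f}")
```

The attacker never touches the model itself, only the data it learns from, which is why defenses in this setting tend to start from the assumption that some training samples cannot be trusted.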

Building interpretable and accessible machine learning

I think companies like Google or Facebook probably have access to large-scale resources, where they can curate and generate high-quality ground truth. In such a scenario, it’s probably wise to try deep learning. On a philosophical level, I also feel that deep learning is like proving there is a Nash equilibrium. You know that it can be done. How exactly it gets done is a separate problem. As a scientist, I am interested in understanding what, exactly, is making this work. For example, if you throw deep learning at a problem and the classification rates come back very low, then we probably need to look at a different problem, because you just threw the kitchen sink at it. However, if we find that it is doing a good job, then what we need to do is start from there and figure out an explainable model that we can train. We are an enterprise, and in the enterprise industry, it’s not sufficient to have an answer; we need to be able to explain why. For that reason, there are issues with simply applying deep learning as it is.

What I’m really interested in these days is the idea of explainable machine learning. It’s not enough that we build machine learning systems that can do a certain classification or segmentation job very well. I’m starting to be really interested in the idea of how to build systems that are interpretable, that are explainable—where you can have faith in the outcome of the system by inspecting something about the system that allows you to say, ‘Hey, this was actually a trustworthy result.’

Related resources:

Applying Machine Learning in Security: A recent survey paper co-written by Parvez Ahammad